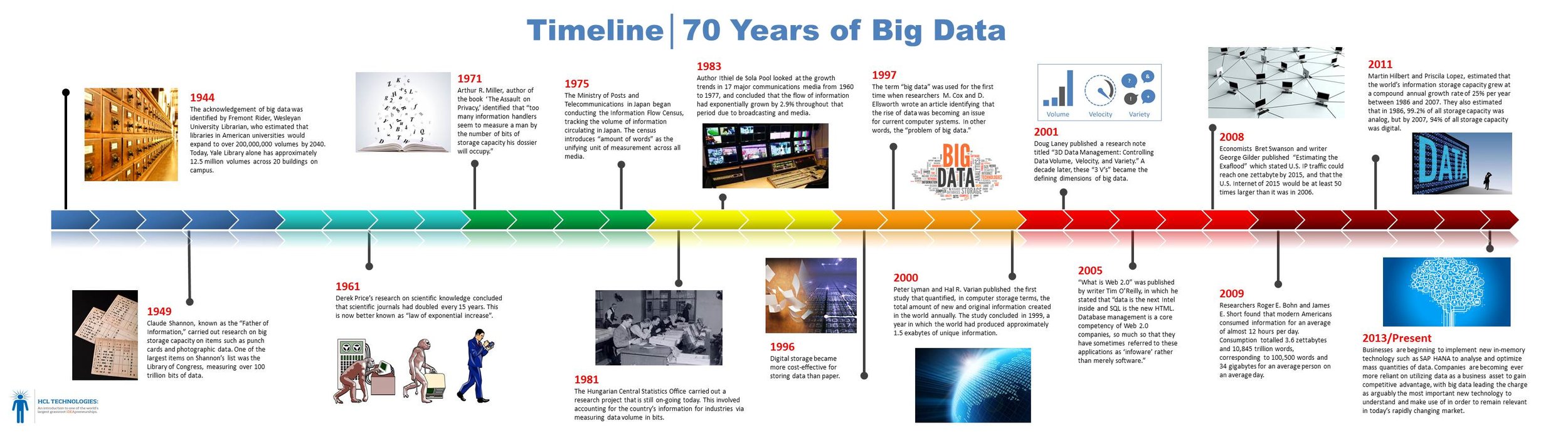

BIG DATA'S BIGGEST ACCOMPLISHMENTS

A Timeline of Big Data’s Big Moments in History

1965: The U.S. Government built the first federal data center to store over 742 million tax returns and 175 million sets of fingerprints, marking the beginning of the electronic data storage era.

1989: Author Erik Larson is credited with the first published use of the term “Big Data,” in an article for Harper’s Magazine.

1995: The first supercomputer is built. Data storage begins to pile up in the form of Microsoft Excel documents.

Image source: http://www.npr.org/2011/06/19/137280862/the-first-supercomputer-vs-the-desk-set

1997: The term “big data” was used for the first time in an article published by NASA. This was also the first time the “problem of big data” was addressed.

2001: Gartner analyst Doug Laney defined the three characteristics of big data: volume, velocity, and variety.

- SAS

2002: Digital data storage surpasses non-digital storage for the first time, with 94% of information storage capacity being digital.

Image source: Kleiner Perkins

2007: The term “big data” reaches mainstream media.

2011: McKinsey predicts there will be a major demand for data scientists by 2018, but not enough supply.

Image source: McKinsey

2015: Large-scale data breaches – which have increased in size and frequency exponentially over the last decade – show no signs of abating. The US Government starts the year by announcing that details of four million of its employees have been stolen from its Office of Personnel Management.

- Forbes

2016: A huge increase in data volume emerges, reflected in the surge of cloud data services such as Amazon Web Services, Rackspace, and Azure, among other providers.

2017: Urban Science outperforms the automotive industry’s biggest data companies with the fastest, most accurate sales and suppression data in existence. With 99.7% coverage of U.S. auto industry sales data, dealerships now have the power to immediately optimize the performance of all marketing campaigns while outperforming their competitors with the use of unprecedented, near-real-time data science.

1988: IBM defines the need for high-quality, complete and accurate data warehousing and storage.

1990s: John Mashey, Chief Scientist at Silicon Graphics, is regarded as the “father of big data,” responsible for popularizing the term within the high-tech community.

1996: Digital storage becomes a more cost-effective option than paper for storing data. More companies switch to digital files, allowing analytics to take hold much more quickly.

1996: General Motors became the first automaker to introduce the idea of a “connected car” with OnStar included in select Cadillac models.

1999: The term “big data” as we define it today was first published in the Communications of the ACM. The authors emphasized, “The focus of using big data should be on finding hidden insights and not so much on how much of it exists.”

2006: Hadoop was created to handle the explosion of big data.


2010: Eric Schmidt, executive chairman of Google, tells a conference that as much data is now being created every two days as was created from the beginning of human civilization to the year 2003.

2013: Big data leads the charge as “the most important new technology to understand and make use of in order to remain relevant in today’s rapidly changing market.” - IDG Communications, Inc.

2014: Audi becomes the first automaker to offer 4G LTE Wi-Fi hotspot access. Mobile usage surpasses desktop for the first time. 88% of business executives surveyed by GE say big data is a top priority for their business.

2016: According to newly released findings from the Breach Level Index (BLI), there were 974 publicly disclosed data breaches in the first half of 2016, which led to the successful theft or loss of 554 million data records.

BIG DATA'S BIG FUTURE

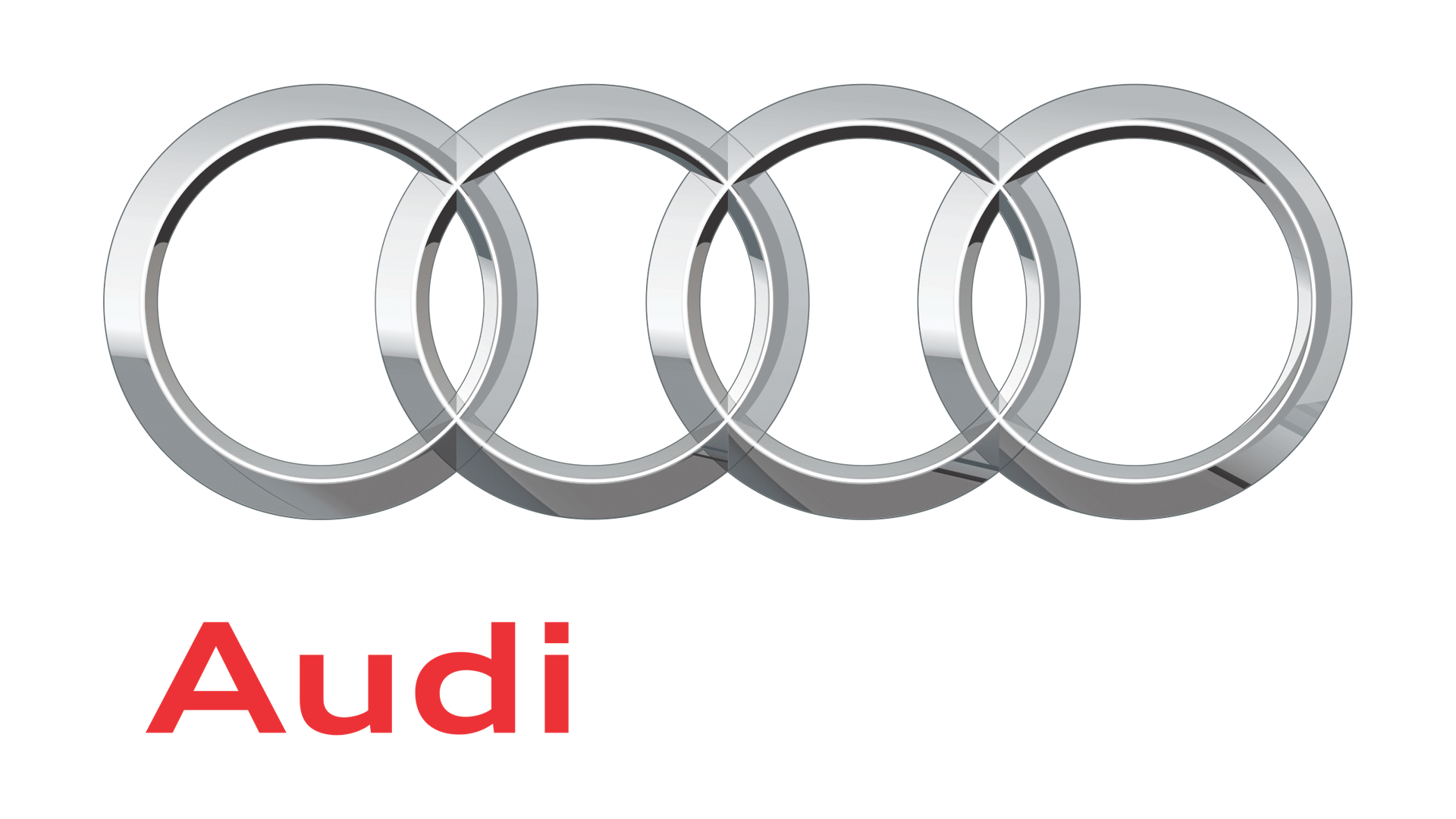

2020: Machina Research expects 90% of new cars to feature built-in Internet connectivity by 2020.

2045: “It will be impossible for a driver to impact another vehicle or drive off the road without the serious intention of doing so,” says Scott McCormick of the Connected Vehicle Association.

FULL ARTICLE HERE - http://articles.sae.org/15067/

ADDITIONAL RESOURCES

FULL ARTICLE HERE - https://www.hcltech.com/blogs/history-big-data